Mrdeepfakes Shutdown: The Dark Side Of AI Porn & Deepfakes EXPOSED!

Is the internet's dark side growing darker? The abrupt closure of Mrdeepfakes, once the internet's leading deepfake porn site, signals a pivotal moment in the ongoing battle between technological advancement and ethical responsibility.

Mrdeepfakes, a website notorious for hosting non-consensual, sexually explicit deepfake content, garnered an estimated 14 million hits each month, making it the world's largest platform of its kind. Its sudden demise was attributed to a critical service provider pulling its support and was preceded by a warning about the site's future. The shutdown raises significant questions about online content regulation and the ethical implications of artificial intelligence. The website's operators announced that it would not relaunch, citing massive data loss, bringing an end to a controversial chapter in the history of online pornography. But the issues surrounding deepfakes (the technology, the ethics, and the potential for abuse) remain very much alive.

| Attribute | Information |
|---|---|
| Name (Alleged) | David D. |
| Age (Reported in 2024) | 36 years old |
| Location (Reported) | Toronto, Canada |
| Occupation (Reported) | Former hospital employee |
| Alleged Role | One of the individuals behind Mrdeepfakes |
| Known For | Allegedly launching various dubious websites |
| Source | Report by Spiegel |

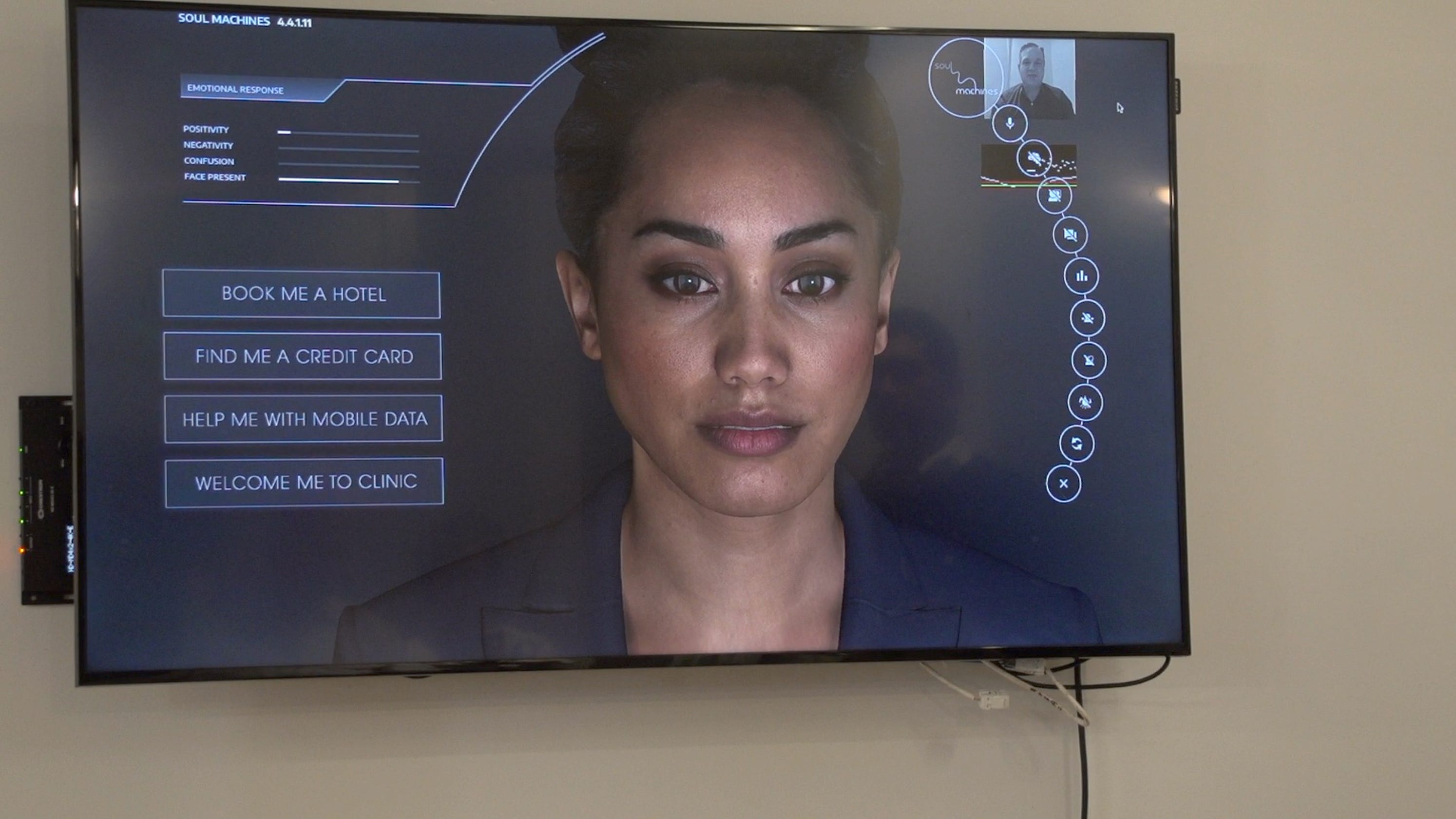

The rise of deepfakes, synthetically generated media that can convincingly mimic a real person's appearance and voice, is a direct consequence of the rapid evolution of artificial intelligence. While AI has permeated nearly every sector of modern life, deepfakes remain one of its most controversial applications. The technology often relies on Generative Adversarial Networks (GANs), algorithms that learn the characteristics of a target individual from existing images and videos. This information is then used to overlay the target's face onto another person's body, creating a seamless, albeit fabricated, video.

The closure of Mrdeepfakes does not eliminate the problem of deepfake porn. Instead, it serves as a stark reminder of the challenges involved in policing this emerging technology. The site's popularity highlighted the insatiable demand for this type of content, but it also exposed the darker side of the internet, where anonymity allows for the exploitation of individuals without their consent.

The prevalence of sexual deepfake material has exploded in recent years, driven by factors ranging from technological advancements to the dark recesses of human desire. Attackers create and utilize deepfakes for a variety of reasons: to seek sexual gratification, to harass and humiliate targets, or to exert power over an intimate partner. The motivation can be as simple as a desire for vicarious thrills or as malicious as a calculated campaign of online abuse.

This growth has been further enabled by the emergence of online marketplaces where deepfake material can be bought and sold. These platforms provide a financial incentive for the creation and dissemination of non-consensual content, fueling the proliferation of deepfakes and making it more difficult to combat the problem. The anonymity afforded by these platforms also shields perpetrators from accountability, allowing them to operate with impunity.

Beyond the immediate impact on individuals targeted by deepfakes, the technology poses broader societal concerns. The ease with which realistic-looking fake videos can be created raises questions about the trustworthiness of online content. Deepfakes can be used to spread disinformation, manipulate public opinion, and even incite violence. In a world increasingly reliant on digital information, the potential for deepfakes to undermine trust in institutions and individuals is significant.

The legal landscape surrounding deepfakes is still evolving, and many jurisdictions are grappling with how to regulate this emerging technology. Existing laws related to defamation, harassment, and copyright may offer some protection, but they are not always adequate to address the unique challenges posed by deepfakes. Some states have passed specific laws criminalizing the creation and distribution of non-consensual deepfakes, while others are considering similar legislation. However, the global nature of the internet makes it difficult to enforce these laws effectively.

Moreover, detecting deepfakes can be a challenging task. While sophisticated AI tools are being developed to identify fabricated videos, the technology used to create deepfakes is constantly improving, making it increasingly difficult to distinguish between real and fake content. The arms race between deepfake creators and deepfake detectors is likely to continue, with each side constantly seeking to outmaneuver the other.

The closure of Mrdeepfakes has sparked a renewed debate about the role of technology companies in policing online content. Many platforms have policies prohibiting the creation and distribution of non-consensual deepfakes, but enforcing these policies effectively is a complex undertaking. Content moderation teams often struggle to keep pace with the sheer volume of content being uploaded, and algorithms designed to detect deepfakes are not always accurate.

One of the key challenges in addressing the deepfake problem is the issue of consent. In many cases, individuals whose images are used in deepfakes are unaware that their likeness is being exploited. Even when individuals are aware of the existence of deepfakes, they may feel powerless to prevent their creation or distribution. The lack of legal recourse and the potential for online harassment can leave victims feeling vulnerable and isolated.

The anonymity afforded by the internet also makes it difficult to hold perpetrators accountable. Individuals can create deepfakes using pseudonymous accounts and distribute them through encrypted channels, making it difficult for law enforcement to track them down. Even when perpetrators are identified, prosecuting them can be a challenge, as the legal framework for addressing deepfakes is still underdeveloped.

The closure of Mrdeepfakes is not a decisive victory in the fight against deepfake abuse, but rather a symptom of a much larger problem. The underlying technology continues to advance, and the demand for non-consensual content remains strong. Addressing the problem effectively will require a multifaceted approach that includes technological solutions, legal reforms, and increased public awareness.

The development of more sophisticated deepfake detection tools is essential. These tools can help platforms identify and remove fabricated videos before they can cause harm. However, it is important to ensure that these tools are accurate and reliable, as false positives could lead to the censorship of legitimate content.

Legal reforms are also needed to provide victims of deepfake abuse with greater protection. Laws that specifically criminalize the creation and distribution of non-consensual deepfakes can deter potential perpetrators and provide victims with a legal avenue for redress. However, it is important to ensure that these laws are carefully crafted to avoid infringing on freedom of speech.

Increased public awareness is also crucial. By educating people about the dangers of deepfakes, we can help them become more critical consumers of online content and less likely to fall victim to disinformation campaigns. Public awareness campaigns can also help to destigmatize deepfake abuse and encourage victims to come forward and report incidents.

The case of Mrdeepfakes serves as a cautionary tale about the potential for technology to be used for malicious purposes. As AI continues to evolve, it is essential that we develop ethical guidelines and regulatory frameworks to ensure that this powerful technology is used responsibly. The future of online content depends on it.

The concerns surrounding Mrdeepfakes extend beyond the immediate victims of its content. The platform's existence normalized the concept of non-consensual deepfake pornography, desensitizing viewers to the ethical implications of creating and consuming such material. This normalization can have a corrosive effect on society, eroding trust in institutions and individuals and contributing to a climate of online harassment and abuse.

The anonymity afforded by platforms like Mrdeepfakes enabled a culture of impunity, where users felt free to upload and share non-consensual content without fear of reprisal. This anonymity also made it difficult to hold perpetrators accountable for their actions, further perpetuating the cycle of abuse.

The closure of Mrdeepfakes is a step in the right direction, but it is only a small victory in the larger battle against online exploitation and abuse. The underlying problems that fueled the site's popularity (the demand for non-consensual content, the ease with which deepfakes can be created, and the lack of effective regulation) remain largely unaddressed.

Moving forward, it is essential that we adopt a comprehensive approach to addressing the deepfake problem. This approach should include technological solutions, legal reforms, and educational initiatives. We must also foster a culture of online responsibility, where individuals are held accountable for their actions and platforms are proactive in preventing the spread of harmful content.

The challenge is not simply to shut down individual websites like Mrdeepfakes, but to create a safer and more ethical online environment for everyone. This requires a collective effort from technology companies, lawmakers, educators, and individual users. Only by working together can we hope to mitigate the risks posed by deepfakes and ensure that technology is used to empower, rather than exploit, individuals.

The individuals behind Mrdeepfakes operated anonymously for years, shielding themselves from accountability and scrutiny. This anonymity allowed them to profit from the exploitation of others without fear of legal or social consequences.

Earlier this year, however, a report by Spiegel identified a man named David D. as one of the individuals behind the site. According to the report, David D. was a 36-year-old living in Toronto who had previously worked in a hospital. He was also believed to have launched various other dubious websites.

The identification of David D. provides a glimpse into the individuals who are profiting from the deepfake industry. However, it is likely that there are many other individuals involved in the creation and distribution of non-consensual deepfakes who remain anonymous.

The closure of Mrdeepfakes highlights the need for greater transparency and accountability in the online world. Platforms should be required to verify the identities of their users and to take steps to prevent the creation and distribution of harmful content. Law enforcement agencies should also prioritize the investigation and prosecution of individuals who are involved in the deepfake industry.

In 2018, Reddit officially banned deepfake porn, recognizing the ethical and legal concerns associated with this type of content. This ban was a significant step in the fight against deepfake abuse, but it also highlighted the challenges involved in regulating online content. While Reddit's ban effectively removed deepfake porn from its platform, it did not prevent it from being hosted and distributed elsewhere on the internet.

The term "deepfake" refers to an image or video created with the aid of artificial intelligence (AI), commonly a Generative Adversarial Network (GAN) that has learned a target individual's characteristics from existing images and videos.

Detecting fake videos with artificial intelligence (AI) is an ongoing area of research. While deepfake technology itself has diverse applications in art, science, and industry, its potential for malicious use in areas such as disinformation, identity fraud, and harassment has raised concerns about its dangerous implications.

These concerns have prompted calls for increased regulation and ethical guidelines to govern the development and use of deepfake technology. The closure of Mrdeepfakes underscores the urgency of addressing these challenges.

The shutdown of Mrdeepfakes is a landmark event in the ongoing saga of deepfake technology and its impact on society. It serves as a potent reminder of the ethical dilemmas and potential harms that can arise from unchecked technological advancement. As deepfakes continue to evolve and become more sophisticated, it is imperative that we develop effective strategies for mitigating their risks and protecting individuals from exploitation and abuse. Only through a concerted effort can we hope to navigate the complex landscape of deepfakes and ensure that this technology is used for good, rather than ill.

The website's use of a cartoon image resembling President Trump added another layer of complexity to the ethical questions at hand. The use of a political figure, even in caricature, highlights the potential for deepfakes to be weaponized for political manipulation and disinformation campaigns.

Strg_f, a project jointly funded by German public broadcasters ARD and ZDF, and Dutch broadcasters, has been actively working to detect and expose deepfakes. Their efforts highlight the importance of investigative journalism and media literacy in combating the spread of misinformation.

The site became an open space for this activity. Users could upload videos and connect with creators to commission new ones, creating a marketplace for deepfake content, both consensual and non-consensual. This accessibility further fueled the proliferation of deepfakes and made the technology more difficult to regulate.